Neuromorphic processors aim to provide vastly more power-efficient operations by modelling the core workings of the brain.

As artificial intelligence (AI) continues to evolve, AI at the edge is expected to become a more significant portion of the tech market. For this segment, known as the AI of Things or AIoT, processor vendors such as Intel and Nvidia have launched AI chips for lower-power environments with their Movidius and Jetson product lines, respectively.

Computing at the edge also delivers lower latency than sending information to the cloud. Ten years ago, there were questions about whether software and hardware could be made to work like a biological brain, including its incredible power efficiency.

Today, advances in technology have answered that question with a yes. The challenge now is for the industry to capitalise on neuromorphic technology development and answer tomorrow’s computing challenges.

The Crux Of Neuromorphic Computing

Neuromorphic computing differs from the classical approach to AI, which is generally based on convolutional neural networks (CNNs), in that it mimics the brain much more closely through spiking neural networks (SNNs).

Although neuromorphic chips are generally digital, they tend to be built on asynchronous circuits, meaning there is no global clock. Depending on the specific application, neuromorphic chips can be orders of magnitude faster and require less power. Neuromorphic computing complements CPU, GPU, and FPGA technologies for particular tasks, such as learning, searching and sensing, with extremely low power and high efficiency.
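To make the idea of a spiking, clockless neuron more concrete, here is a minimal Python sketch of a leaky integrate-and-fire neuron, a common textbook model for SNNs. It is an illustration only, not the design of any particular chip: the class name, the time constant, the threshold and the input weights are all assumed values chosen for the example. The neuron does no work between inputs; its state is updated only when an input spike arrives.

```python
import math


class LIFNeuron:
    """Leaky integrate-and-fire neuron, updated only when an input spike arrives."""

    def __init__(self, tau=20.0, threshold=1.0):
        self.tau = tau              # membrane time constant in ms (illustrative value)
        self.threshold = threshold  # firing threshold (illustrative value)
        self.potential = 0.0        # current membrane potential
        self.last_time = 0.0        # time of the most recent input event

    def receive(self, time, weight):
        """Process one input spike; return True if the neuron fires."""
        # Apply the leak for the time that has passed since the last event.
        elapsed = time - self.last_time
        self.potential *= math.exp(-elapsed / self.tau)
        self.last_time = time

        # Integrate the incoming spike.
        self.potential += weight

        # Fire and reset once the threshold is reached.
        if self.potential >= self.threshold:
            self.potential = 0.0
            return True
        return False


def run(events, **neuron_args):
    """Feed (time, weight) events to a fresh neuron and return its firing times."""
    neuron = LIFNeuron(**neuron_args)
    return [time for time, weight in events if neuron.receive(time, weight)]


# Two inputs close together push the neuron over threshold;
# isolated inputs leak away before they can add up.
events = [(1.0, 0.6), (2.0, 0.6), (50.0, 0.6), (120.0, 0.6)]
output_spikes = run(events)
print(output_spikes)
```

In this sketch only the pair of closely spaced inputs produces an output spike. Because nothing is computed while the input is silent, sparse activity translates directly into less work, which is the intuition behind the power savings described above.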

Researchers have lauded neuromorphic computing’s potential, but the most impactful advances to date have occurred in academic, government and private R&D laboratories. That appears ready to change.

A report by Sheer Analytics & Insights estimates that the worldwide market for neuromorphic computing will grow at a 50.3 per cent CAGR to $780 million over the next eight years. Mordor Intelligence, on the other hand, aimed lower, with a $111 million market growing at a 12 per cent CAGR to reach $366 million by 2025.

Forecasts vary, but enormous growth seems likely. The current neuromorphic computing market is driven largely by increasing demand for AI and brain chips for use in cognitive robots, which can respond like a human brain.

Numerous advanced embedded system providers are developing these brain chips with the help of AI and machine learning (ML) so that they think and respond like the human brain.

This increased demand for neuromorphic chips and software for signal, data, and image processing in the automotive, electronics, and robotics verticals is projected to fuel the market further.

Use cases such as video analysis through machine vision and voice identification are also projected to aid market growth. Major players in this development include Intel, Samsung, IBM and Qualcomm.

Researchers are still trying to find out where practical neuromorphic computing should go first; vision and speech recognition are the most likely candidates. Autonomous vehicles could also benefit from such human-like learning without human-like distraction or cognitive errors.

BrainChip’s Akida architecture is event-based. It supports on-chip training and inference, as well as various sensor inputs such as vision, audio, olfactory, and innovative transducer applications.

Akida already features in a unique product: the Mercedes EQXX concept car, displayed at CES this year, where it was used for voice control to reduce power consumption by up to 10x. Opportunities for the Internet of Things (IoT) and the edge range from the factory floor to the battlefield.

Neuromorphic computing will not directly replace modern CPUs and GPUs. Instead, the two approaches will be complementary, each suited to its own sorts of algorithms and applications.

The Potential Underneath

Neuromorphic computing came into existence through the pursuit of using analogue circuits to mimic the synaptic structures found in brains.

Our brains excel at picking out patterns from noise and at learning. A neuromorphic edge CPU excels at processing discrete, transparent data. For the same reason, many believe neuromorphic computing can help unlock as-yet-unknown applications and solve large-scale problems that have troubled conventional computing systems for decades. Neuromorphic processors aim to provide vastly more power-efficient operations by modelling the core workings of the brain.

In 2011, HRL announced that it had demonstrated its first “memristor” array, a form of non-volatile memory storage that could be applied to neuromorphic computing. Two years later, HRL’s first neuromorphic chip, “Surfrider”, was released.

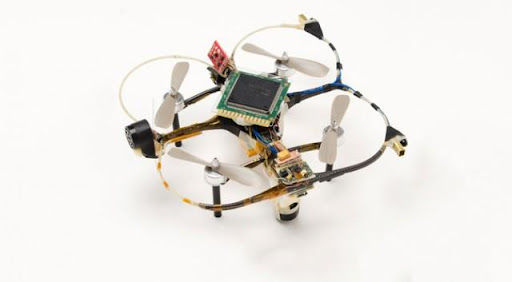

As reported by the MIT Technology Review, Surfrider featured 576 neurons and ran on just 50 mW of power. Researchers tested the chip by fitting it to a sub-100-gram drone aircraft loaded with several optical, infrared, and ultrasound sensors and sending the drone into three rooms.

The drone was observed to have “learned” the entire layout of the first room and the objects in it through sensory input. Using what it had learned, it could then “learn on the fly” in a new room, or recognise that it had been in the same room before.

Today, most neuromorphic computing work is carried out using deep learning algorithms that run on CPUs, GPUs, and FPGAs. None of these is optimised for neuromorphic processing. However, next-gen chips such as Intel’s Loihi were designed exactly for these tasks and can achieve similar results with a far smaller energy profile. This efficiency will prove critical for the coming generation of small devices needing AI capabilities.

Deep learning feed-forward neural networks (DNNs) underperform neuromorphic solutions like Loihi. DNNs are feed-forward in structure, with data moving straight from input to output. Recurrent neural networks (RNNs) are closer to the way a brain works, using feedback loops and exhibiting more dynamic behaviour, and RNN workloads are where chips like Loihi shine.
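The difference between the two network styles can be shown with a toy Python sketch. It uses a single unit of each kind with made-up weights (1.0 for the input and 0.9 for the feedback connection, both assumptions for illustration) and has nothing to do with Loihi's actual programming model. The feed-forward unit sees only the current input, while the recurrent unit also feeds its own previous output back in.

```python
import math


def feed_forward_step(x, w_in):
    """Feed-forward unit: the output depends only on the current input."""
    return math.tanh(w_in * x)


def recurrent_step(x, h_prev, w_in, w_rec):
    """Recurrent unit: the previous output is fed back in alongside the input."""
    return math.tanh(w_in * x + w_rec * h_prev)


# A single input pulse followed by silence.
inputs = [1.0, 0.0, 0.0, 0.0]

# The feed-forward unit responds to the pulse and then falls silent at once.
ff_outputs = [feed_forward_step(x, w_in=1.0) for x in inputs]

# The recurrent unit keeps a fading trace of the pulse through its feedback loop.
h = 0.0
rnn_outputs = []
for x in inputs:
    h = recurrent_step(x, h, w_in=1.0, w_rec=0.9)
    rnn_outputs.append(h)

print(ff_outputs)
print(rnn_outputs)
```

After the pulse, the feed-forward outputs drop straight back to zero, whereas the recurrent outputs decay gradually. That lingering internal state is the "more dynamic behaviour" the feedback loop provides.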

Samsung, for its part, announced that it would expand its neural processing unit (NPU) division by 10x, growing from 200 employees to 2000 by 2030. Samsung said at the time that it expected the neuromorphic chip market to grow by 52 per cent annually through 2023.

One of the future challenges in the neuromorphic space will be defining standard workloads and methodologies for benchmarking and analysis. In conventional computing, benchmarking applications such as 3DMark and SPECint have played a critical role in helping people understand the technology and helping adopters match products to their needs.

Currently, neuromorphic computing remains deep in the R&D stage, and there are only a few substantial commercial offerings in the field. Still, it is becoming clear which applications are well suited to neuromorphic computing and which are not. For those workloads, neuromorphic processors will be faster and more power-efficient than any modern, conventional alternative.

CPU and GPU computing, on the other hand, will not disappear as a result of such developments; neuromorphic computing will sit beside them, handling challenging roles better, faster, and more efficiently than anything we have seen before.
