Brand developers are looking at voice assistants to boost their customer experience. Datatechvibe pits Alexa and Google Assistant against each other on various factors, including context recognition and learning capabilities.

Voice assistants are the lynchpins of smart devices, and our world will have over eight billion Intelligent Voice Assistants (IVAs) by 2024. Now, most industry sectors are leveraging IVAs to boost customer experience (CX).

For instance, in the logistics industry, Fero provides an ecosystem of Transport Interactive Assistants (TiAs) that listen, understand, talk, and send messages over various messaging platforms.

Some other brands are collaborating with consumer-familiar assistants like Siri, Alexa, and Google Assistant to enhance their CX. Platforms like eBay and Kroger have been using the GET_THING and OPEN_APP intents. When a user says, “Hey Google, find vintage gramophone on eBay,” the Assistant opens the eBay application with the search results.
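To make the eBay example concrete, here is a minimal sketch of how a spoken search request could be turned into an in-app search link. It is an illustration only: the regular expression, the `resolve` function, and the `shop.example.com` URL template are all hypothetical, and real assistants rely on trained language models and app-declared deep links rather than pattern matching.

```python
import re
from typing import Optional
from urllib.parse import quote_plus

# Hypothetical deep-link template; a real app would declare its own.
SEARCH_TEMPLATE = "https://shop.example.com/search?q={query}"

# Toy pattern for "find <thing> on <app>", with an optional wake phrase.
UTTERANCE = re.compile(
    r"^(?:hey google,\s*)?find (?P<thing>.+) on (?P<app>\w+)$",
    re.IGNORECASE,
)

def resolve(utterance: str) -> Optional[dict]:
    """Map a spoken search request to an app name and a search link."""
    match = UTTERANCE.match(utterance.strip())
    if match is None:
        return None  # not a search request this sketch understands
    thing = match.group("thing")
    return {
        "intent": "GET_THING",  # intent label used here for illustration
        "app": match.group("app"),
        "thing": thing,
        "deep_link": SEARCH_TEMPLATE.format(query=quote_plus(thing)),
    }

print(resolve("Hey Google, find vintage gramophone on eBay"))
```

The point of the sketch is the division of labour: the assistant extracts the item being searched for, and the brand's app only has to accept that item as a search parameter.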

Amazon’s Alexa and Google’s Google Assistant are two of the pioneers in the world of voice assistants. While the former is powered through Natural Language Processing (NLP) technology, Google Assistant is controlled by Artificial Intelligence (AI).

Several companies are already integrating one of the two into their devices. Disney partnered with Amazon to build on Alexa’s technology and create a new voice assistant for its smart devices. Echo users will be able to buy the “Hey, Disney” assistant from the Alexa Skills store next year.

In other news, tech giants are always looking to enhance the AI-based capabilities of voice assistants. IBM, through its Watson Machine Learning Accelerator solution, collaborated with DeepZen to develop deep learning neural networks that recognise emotions in text and generate human-like speech.

Understanding the technology that the tech giants use in Alexa, Google Assistant, and other assistants is essential to identify the gaps and flaws, and to build more innovative technical capabilities into AI voice assistants.

The technology of voice-based search began with Siri in 2011. Tech and business leaders at the time could not have imagined that it would become hyper-mainstream worldwide. Juniper Research predicts that by 2024, consumers will be interacting with voice assistants on over 8.4 billion devices, overtaking the world’s population.

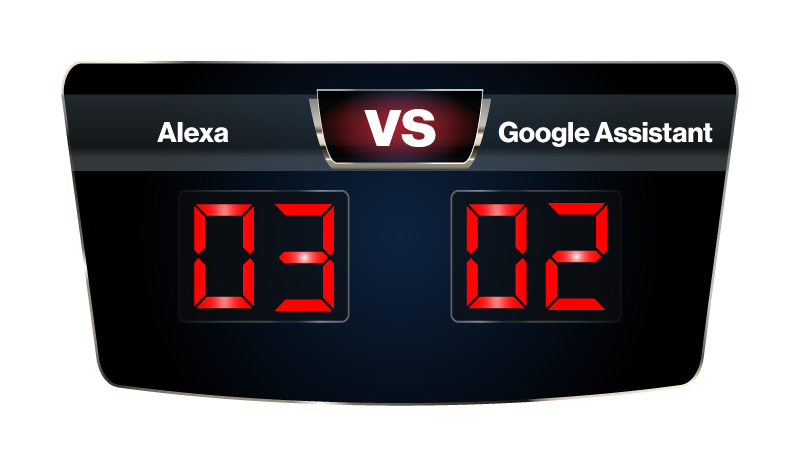

But which assistant is better for brand developers to make better business investments and purchase decisions? Alexa or Google Assistant? To deliver better insights, Datatechvibe pits the two voice assistants against each other on various factors beyond their offered features.

The Showdown

Privacy

Privacy is a familiar battleground for both AI assistants, but especially for Alexa. For years, there have been several reports about Alexa spying on conversations. But Amazon has been frantically upgrading its privacy features to mend its reputation. Recently, Amazon added a new feature to Alexa that gives users the option to choose and save particular voice recordings, while all others are permanently deleted.

Another feature added to Alexa devices is that all user voice commands are processed within the Alexa device, which means audio clips will no longer be sent to the cloud for processing.

On the other hand, Google Assistant is designed to wait in standby mode until it hears its prompt, “Hey Google”, and it does not record conversations in this mode. Otherwise, users need to manually change settings under Web & App Activity to record and save conversations. Moreover, a recent privacy update allows users to quickly delete any record of the previous command with “Hey Google, that was not for you.” This puts Google Assistant miles ahead of Alexa. But let’s dig deeper.

Alexa does allow users to ask for privacy settings reviews and get more details on how to manage all their data. Amazon claims that an “extremely small” number of Alexa recordings are annotated to help improve its speech recognition systems, and the same is true of Google Assistant. But in 2020, Google confirmed that third-party workers who were analysing the language data had leaked over 1,000 private conversations. Google had to pause all language review operations.

Although Alexa has faced multiple lawsuits over privacy concerns, Google Assistant has been in the spotlight of late. Recently, a federal judge ruled that Google must face a lawsuit over Google Assistant recording user conversations after false activations. The suit alleges that these conversations were then also analysed to target advertising.

Both Alexa and Google Assistant give the user the option of allowing or restricting recordings, but because their safeguards are imperfect, both are on consumers’ blacklist when it comes to privacy. Seems like a draw.

But a recent report indicates that Alexa collects more data than any other available AI assistant. And the more data collected, the more privacy concerns pop up. This puts Google Assistant an inch ahead of Alexa.

Winner: Google Assistant

Learning Capability

A voice assistant that learns quickly is a gamechanger, as better learning capabilities also mean enhanced, personalised services for users.

Amazon has offered consumers the tools to train Alexa on voice and sound recognition. It can learn the user’s preferences with a simple command, “Alexa, learn my preferences.” In the past, Amazon used thousands of samples to train Alexa about sounds, but with this new feature, six to 10 custom samples provided by users can do the trick.

Meanwhile, in fierce competition, Google wanted the Assistant not only to recognise people’s names but also to pronounce them accurately. Google Assistant learns to pronounce names based on the user’s own pronunciation. The Assistant is also capable of memorising the pronunciation without recording the user’s utterance, a privacy bonus.

Active learning in Alexa’s speech recognition and NLU allows it to identify the areas where it needs to improve and to learn from the user, which substantially reduces its error rates. Transfer learning involves the transfer of knowledge from a related task that has already been learned to a new task with similar demands. Introducing this technique in Alexa via deep learning caused a 25 per cent reduction in error rate in a year.
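The idea behind transfer learning can be shown with a toy example. The sketch below is not Amazon's method: it is a tiny linear model trained with plain gradient descent, and the data, the `train` and `mse` helpers, and all the numbers are made up for illustration. A model is first trained on a data-rich source task, then fine-tuned on three samples from a similar target task, and compared with a model that starts from scratch on the same three samples.

```python
def mse(w, b, data):
    """Mean squared error of the line y = w * x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(data, w=0.0, b=0.0, steps=20, lr=0.05):
    """Fit y = w * x + b by gradient descent, starting from (w, b)."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Source task: plenty of data following y = 2x + 1.
source = [(k / 10, 2.0 * (k / 10) + 1.0) for k in range(-20, 21)]
# Target task: only three samples from the related rule y = 2x + 1.5.
target = [(-1.0, -0.5), (0.0, 1.5), (1.0, 3.5)]

w_src, b_src = train(source, steps=500)               # learn the source task
scratch = train(target, steps=5)                      # no prior knowledge
transfer = train(target, w=w_src, b=b_src, steps=5)   # reuse source weights

print("error from scratch:   ", round(mse(*scratch, target), 3))
print("error with transfer:  ", round(mse(*transfer, target), 3))
```

With the same five training steps, the model that starts from the source-task weights ends up with a much lower error on the target task, because most of what it needs was already learned.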

Machine learning (ML) technology has also been expanding the capabilities of Alexa since 2018. For instance, Alexa learned to carry context from one question to the next and to understand follow-up questions without the user repeating the wake word. Google Assistant introduced the same capability, Continued Conversation, in the same year.

Well, there is a lot more learning power in Alexa than Google Assistant at the moment. Moreover, Ring devices powered by Alexa can be trained to notice when a door that is supposed to be closed is open. This gives Alexa an edge over Google Assistant.

Winner: Alexa

Context Recognition

It is vital for AI virtual assistants to not only listen and recognise words, but also comprehend the user context.

Alexa has been successful with its context recognition abilities for a long time. For instance, if the user asks for a news report, it speaks like a news reporter. What’s more interesting is that if the user whispers the wake word, Alexa will understand that the user probably has a headache or is next to someone who does not want to be disturbed, and whisper back.

Context recognition is the main goal of the Assistant too. The Assistant has been reconstructed with enhanced NLU models to better recognise the intent behind the user’s commands. “Maybe you’ve got a 10-minute timer for dinner going at the same time as another to remind the kids to start their homework in 20 minutes. You might fumble and stop mid-sentence to correct how long the timer should be set for, or maybe you don’t use the exact same phrase to cancel it as you did to create it. Like in any conversation, context matters and Assistant needs to be flexible enough to understand what you’re referring to when you ask for help,” stated Google.

Alongside rebuilding Assistant’s NLU models to understand context better, Google is upgrading its ML technology with BERT, which also powers Search. The company hopes to make Assistant process each word in relation to all the other words in a sentence, rather than evaluating the words one by one in spoken order.
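The difference between the two ways of reading a sentence can be illustrated with a toy example. This sketch does not implement BERT; the two helper functions and the sample sentence are our own, and they only show which words are available as context for a given word under each approach.

```python
def left_to_right_context(words, index):
    """Words available when the sentence is read strictly in spoken order."""
    return words[:index]

def bidirectional_context(words, index):
    """Words available when each word is read against the whole sentence."""
    return words[:index] + words[index + 1:]

# A timer request with a mid-sentence correction.
sentence = "set a timer for ten no twenty minutes".split()
i = sentence.index("ten")

print("spoken order: ", left_to_right_context(sentence, i))
print("full sentence:", bidirectional_context(sentence, i))
```

Read in spoken order, the word “ten” is interpreted before the correction “no twenty” has been heard. Read against the whole sentence, the correction is part of the context, which is what lets an assistant work out that the timer should run for twenty minutes.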

Alexa is technically not an AI assistant; it runs on automatic speech recognition (ASR) technology that converts spoken words into text. With the addition of natural language understanding (NLU), Alexa has come to understand context a tad better. But with Google’s ML and BERT update, we believe the scales have slightly tipped.

Winner: Google Assistant

Voice and Sound Recognition Ability

Analysing speech is challenging because accents, phrasing, and context vary from one user to another.

Building on the Alexa Guard feature, which lets the voice assistant identify sounds such as breaking glass or a fire alarm, users can now teach Alexa to differentiate between the sound of the doorbell, the whistle of a cooker, the microwave oven alarm, or the sound of running water.

With the new fourth-generation Echo, powered by the AZ1 Neural Edge processor, Alexa gets smarter and more conversational. Amazon introduced an all-neural speech recognition model that can process speech faster, making Alexa more responsive. When a user says, “Alexa, join our conversation”, wake words won’t be required, and the voice assistant will be able to listen to multiple people at the same time.

Additionally, Alexa can wait a few seconds longer for a person to finish speaking. This functionality was added specifically for people with speech impairments and makes Alexa feel more helpful and inclusive.

How does Google Assistant fare? It has a feature called Quick Phrases that lets users trigger Google Assistant with specific phrases, without saying any traditional wake words. With this opt-in feature, the voice assistant can automatically stop or snooze an alarm when it hears the word “stop” or “snooze”; it can also “decline” or “answer” calls. Nevertheless, Google warned users that the feature could accidentally connect calls if it hears an unrelated “Answer” or “Call”. It loses some points here, certainly.

Being a voice-first experience AI assistant, Alexa seems to check more boxes in this round.

Winner: Alexa

Knowledge Base

Considering the Google search engine’s extensive search results, Assistant has an expected advantage in this segment. Or so we thought. While research indicates that Google Assistant can provide users with prompt answers, and Alexa could leave users with a “Sorry, I don’t know that one” response, our own experiment suggested otherwise. We put a set of questions to Alexa and Google Assistant in their respective mobile applications.

Some general knowledge questions were answered by both. For others, Google Assistant laid out a lazy response of “Here are some results I found on the web,” while Alexa actually found a website with a satisfactory answer. For instance, when we asked Google Assistant, “How many steps would it take to walk from Dubai to Egypt?” it came up with “Here is what I found”, along with a list of links. However, when the same question was put to Alexa, we heard, “Dubai is 3,460,000 steps from Egypt, as the crow flies.”
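For a sense of scale, Alexa's step count can be converted back into a distance. The sketch below is a back-of-the-envelope calculation, not a description of how Alexa arrived at its answer: the stride length of 0.762 metres (2.5 feet) is our assumption, since Alexa did not state the one it used, and the function names are ours.

```python
STEPS = 3_460_000   # step count quoted by Alexa
STRIDE_M = 0.762    # assumed stride length in metres (2.5 ft), not stated by Alexa

def steps_to_km(steps, stride_m=STRIDE_M):
    """Convert a number of steps into kilometres for a given stride."""
    return steps * stride_m / 1000

def km_to_steps(km, stride_m=STRIDE_M):
    """Convert kilometres into a whole number of steps for a given stride."""
    return round(km * 1000 / stride_m)

distance_km = steps_to_km(STEPS)
print(f"{distance_km:,.0f} km")  # 2,637 km under the assumed stride
```

A different stride assumption changes the result proportionally, so the figure is only a rough indication of the straight-line distance behind Alexa's answer.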

Moreover, Alexa recently had an exciting Halloween update. If a user quotes a line from a selection of horror films, Alexa can instantly identify the movie being referenced. For instance, the user can watch the 2001 indie film Donnie Darko by saying “Alexa, when will the world end?”

Google Assistant has a bigger knowledge bank, no doubt, but it seems to make the user do the hard work sometimes.

Winner: Alexa

And the champion is…

*Drumroll*

While Google Assistant and Apple’s Siri are tied for first place in terms of market share with 36 per cent each, followed by Alexa at 25 per cent, Alexa takes the lead here, winning three of our five rounds.

Seven years ago, Alexa could perform only 13 tasks, and today the number hits as high as 130,000. Recently, Amazon developers also stated that they were working on enhancing Alexa’s learning and context recognition capabilities to an extent that users would not have to speak to it as much. Meanwhile, we will have to wait and see how Google Assistant’s Quick Phrases capability works for users. Would it bring convenience or distress?

In the meantime, its learning capabilities, voice recognition technology, and consumer-friendly impression make Alexa the safer bet for brand technology developers.

As voice AI and ML continue to improve across all assistants, the competition is such that Alexa may be at the top one day, and the next wave of technological innovations may put Siri on the pedestal. A greater leap to the top by Google Assistant or even Bixby someday might surprise you.
