In April, Nvidia’s CEO Jensen Huang, recently named one of Time magazine’s 100 most influential people, addressed a virtual audience at the GPU Technology Conference 2021 (GTC21) from his kitchen. He had done it before, but this year it got a bit sci-fi. For 14 seconds of the two-hour presentation, a photorealistic digital clone of Huang and his kitchen appeared on screen, and no one noticed.

The Silicon Valley company, which started out powering video games and now designs graphics processing units (GPUs), was showing off its prowess with the latest in artificial intelligence (AI).

The company’s AI-powered chips are now in drones, robots, self-driving cars and supercomputers. A key reason for their spread is how rapidly the chips can handle complex tasks like image, facial and speech recognition.

From its roots in the gaming industry, Nvidia shifted to AI — and how!

Apparently, it all started with a business plan inspired by Neal Stephenson’s breakthrough science-fiction novel Snow Crash, published in 1992. The novel, a cyberpunk exploration of then-futuristic technologies such as mobile computing, virtual reality, wireless Internet, digital currency, smartphones and augmented-reality headsets, influenced Huang to envision a metaverse that he described as “a virtual world that is a digital twin of ours.” It led to the success story called Nvidia.

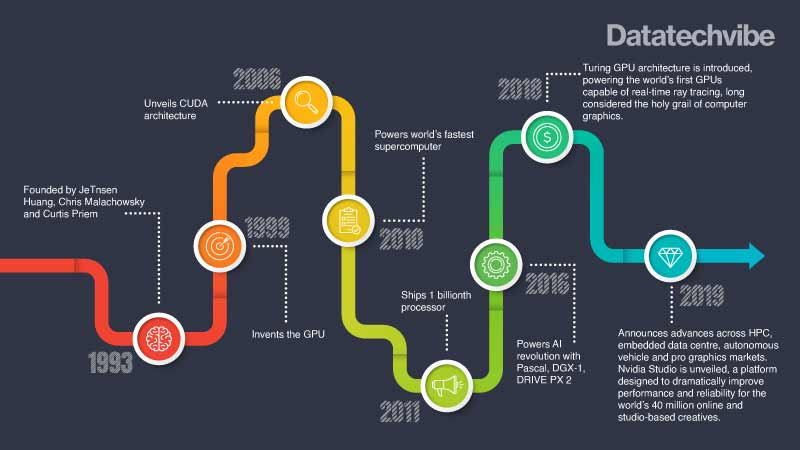

In 1993, Huang, Chris Malachowsky and Curtis Priem founded Nvidia, setting out initially to help PCs offer visual effects to rival those of dedicated video game consoles. At the time, there were more than two dozen graphics chip companies, a number that soared to 70 three years later. By 2006, Nvidia was the only independent one still operating. It held its own in the chip industry as it retooled its products and strategy, gradually separating itself from the competition to become the clear leader in the GPU-accelerator cards used in gaming PCs.

The company bet on CUDA (Compute Unified Device Architecture) as the computing landscape was undergoing broad changes. Using its chips and the software it developed as part of the CUDA effort, the company gradually created a technology platform that became popular with many programmers and companies.

Its pursuit of innovation also brought diversification. From 2008 to 2018, Nvidia expanded into additional markets, such as automotive, and became a significant player in system-on-a-chip (SoC) technology, parallel processing and AI. Undoubtedly, it is one of the most important technology enterprises in the world. Its chips are an important component of many of the world’s most powerful computers, powering AI, drug discovery and autonomous vehicles. Its products, from data centres and cloud computing to gaming and visualisation, are powering the digital economy.

All about GPU innovation and demand

Nvidia is an undisputed leader in the GPU space, and its GPUs have exploded in the world of AI in recent years. By the mid-2000s, its GPU hardware was widely accepted to have set the standard for digital content creation in product design, movie special effects and gaming.

And, so far, things are going swimmingly for Ampere, the successor to both Volta, which was designed to better run the mathematical operations used in deep learning networks, and Turing, which brought real-time ray tracing.

The Nvidia Ampere architecture and its A100 GPU are an upgrade from the Volta architecture. The A100 GPUs can unify training and inference on a single chip, whereas in the past, Nvidia’s GPUs were mainly used for training. This gives Nvidia a competitive advantage, since it can offer both training and inference. Whether it’s the IoT devices littering your home or self-driving cars, more and more computing power is needed, and the Nvidia Ampere architecture is a huge part of meeting that need.

Nvidia is also collaborating with the open-source community to bring end-to-end GPU acceleration to Apache Spark 3.0, an analytics engine for big data processing used by more than 500,000 data scientists worldwide. Building on its strategic AI partnership with Nvidia, Adobe is one of the first companies working with a preview release of Spark 3.0 running on Databricks.

Meanwhile, Nvidia made its entry into high-performance computing, focusing on companies that will need number-crunching power, such as oil companies doing deep-sea seismic analysis, Wall Street banks modelling portfolio risk, and biologists visualising molecular structures to find drug target sites.

In June, Microsoft Azure launched the ND A100 v4 VM series, its most powerful virtual machines for supercomputer-class AI and HPC workloads, which are powered by Nvidia A100 Tensor Core GPUs and Nvidia HDR InfiniBand.

Nvidia collaborated with Azure to architect this new scale-up and scale-out AI platform, which brings together Nvidia Ampere architecture GPUs, Nvidia networking technology and the power of Azure’s high-performance interconnect and virtual machine fabric to make AI supercomputing accessible to everyone.

In July, Nvidia launched the UK’s fastest supercomputer — Cambridge-1, the world’s first collaborative, industry-wide system dedicated to accelerating healthcare and digital biology research using a powerful combination of AI and simulation.

Unsurprisingly, the company has been extending its leadership in supercomputing. The latest TOP500 list shows that Nvidia powers 342 of the world’s top 500 supercomputers.

Data centre with AI

Even though gaming still accounts for a sizable proportion of Nvidia’s business, the data centre segment has become just as important. Using its strong position in AI architectures to re-architect on-premises data centres, public clouds and edge computing installations, Nvidia has been moving away from merely providing GPUs to become what Huang has called a “data centre-scale company”, one that sells optimised AI systems powered by the company’s GPUs and bundled with a growing portfolio of software.

Nvidia’s acquisition of Mellanox Technologies has resulted in new products entering the data centre market, like Nvidia’s new BlueField data processing units.

Public cloud services providers such as Alibaba, Amazon Web Services, Facebook, Google, IBM and Microsoft use Nvidia GPUs in their data centres, prompting Nvidia to launch its GPU Cloud platform, which integrates deep learning frameworks, software libraries, drivers and the operating system.

The company has also ramped up its software portfolio, which now includes offerings like Nvidia AI Enterprise that can be sold by partners.

In addition to data centres, Nvidia has more recently tapped the parallel processing of its GPUs for non-graphics compute tasks. That turned into a huge application in AI. Autonomous cars and robotics are ably helping Nvidia showcase the power of its GPUs and other technologies in the AI world, as its chips become the brains of computers, robots and self-driving cars.

Nvidia is working with several hundred partners in the automotive ecosystem, including automakers, automotive research institutions, HD mapping companies and startups, to develop and deploy AI systems for self-driving vehicles. Its Drive PX platform can understand in real time what’s happening around the vehicle, precisely locate itself on an HD map, and plan a safe path forward. It combines deep learning, sensor fusion and surround vision to change the driving experience.

To enhance the field of robotics, Nvidia developed Isaac Sim — a virtual robot that helps make other robots. Built on the Omniverse platform, Isaac is trained using AI algorithms like reinforcement learning, after which its virtual brain is downloaded into Jetson — Nvidia’s AI supercomputer — to build a new robot.

The new Isaac simulation engine creates better photorealistic environments and streamlines synthetic data generation and domain randomisation, building ground-truth datasets for training robots in applications from logistics and warehouses to the factories of the future.

Nurturing tech startups

AI adoption is growing across industries, and startup funding has, of course, been booming. To better connect venture capitalists with promising AI startups, Nvidia introduced the Nvidia Inception VC Alliance, which aims to fast-track the growth of thousands of AI startups around the globe by serving as a critical nexus between the two communities.

The VC Alliance is part of the Nvidia Inception program, an acceleration platform for over 7,500 startups working in AI, data science and HPC, representing every primary industry and located in more than 90 countries. In addition, Nvidia’s Deep Learning Institute provides developers, data scientists and researchers with practical training on the use of the latest AI tools and technology.

“Artificial intelligence is transforming our world,” wrote computer scientist Andrew Ng in the story on Huang in Time magazine. “The software that enables computers to do things that once required human perception and judgment depends largely on hardware made possible by Jensen Huang.” Undeniably, Huang is driving the surge.

As a company, Nvidia tracks the needs of evolving technology and of its enterprise customers, continuously innovating and designing graphics processors and AI chips that can deliver the extra computing power clients need in an algorithm-driven world.

No surprise, Nvidia is now worth $555 billion and employs 20,000 people. The excitement about AI applications has turned 28-year-old Nvidia into one of the technology sector’s hottest companies.

Nvidia’s $40 billion acquisition of Arm from SoftBank Group has come under a cloud, with both the British and European competition watchdogs opening “in-depth” investigations into the deal. Even so, the American chipmaker has a big head start on Intel, AMD and other rivals. But it can’t relax. Amazon, Google and Microsoft are designing their own chips. The market is swarming with competitors.