The potential of generative AI is much bigger than any of us can imagine today. From healthcare to manufacturing to retail to education, AI is transforming entire industries and fundamentally changing how we live and work. At the heart of all that innovation are developers, pushing the boundaries of possibility and creating new business and societal value even faster than many thought possible. Trusted by organisations worldwide with mission-critical application workloads, Azure is where developers can securely, responsibly, and confidently build with generative AI.

This year, Microsoft will dive deep into the latest technologies across application development and AI, enabling the next wave of innovation. First, it’s about bringing you state-of-the-art, comprehensive AI capabilities and empowering you with the tools and resources to build with AI securely and responsibly. Second, it’s about giving you the best cloud-native app platform to harness the power of AI in your business-critical apps. Third, it’s about AI-assisted developer tooling that helps you securely ship the code only you can build.

Microsoft has made announcements in each of these areas to empower organisations to lead in this new era of AI.

Bring your data to life with generative AI

Generative AI has quickly become the generation-defining technology, shaping how we search for and consume information every day, and it’s been wonderful to see customers across industries embrace Microsoft Azure OpenAI Service. In March, we announced the preview of OpenAI’s GPT-4 in Azure OpenAI Service, making it possible for developers to integrate custom AI-powered experiences directly into their applications. Today, OpenAI’s GPT-4 is generally available in Azure OpenAI Service, and we’re building on that announcement with several new capabilities you can use to apply generative AI to your data and to orchestrate AI with your systems.

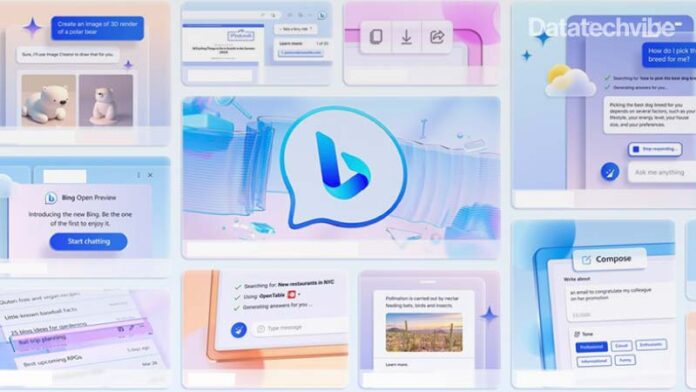

Azure OpenAI Service Build graphic

We’re excited to share our new Azure AI Studio. With just a few clicks, developers can now ground powerful conversational AI models, such as OpenAI’s ChatGPT and GPT-4, on their own data. With Azure OpenAI Service on your data, coming to public preview, and Azure Cognitive Search, employees, customers, and partners can use natural-language app interfaces to discover information buried in large volumes of data, text, and images. Create richer experiences and help users find organisation-specific insights, such as inventory levels, healthcare benefits, and more.
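Grounding generally means retrieving relevant passages from your own data and supplying them to the model alongside the user’s question. The sketch below shows only the prompt-assembly step, assuming the passages have already been retrieved (for example, by a search index). The function name `build_grounded_messages` and the system-message wording are hypothetical, and the role/content message shape mirrors the common chat-completion format rather than any specific Azure OpenAI Service on your data configuration.

```python
from typing import Dict, List


def build_grounded_messages(question: str, passages: List[str]) -> List[Dict[str, str]]:
    """Hypothetical helper: ask the model to answer only from the given passages."""
    # Number each retrieved passage so an answer can cite its sources.
    sources = "\n".join(f"[{i}] {text}" for i, text in enumerate(passages, start=1))
    system = (
        "Answer using only the sources below. "
        "If the answer is not in the sources, say you don't know.\n\n"
        f"Sources:\n{sources}"
    )
    # Assumed chat format: a system message carrying the grounding data,
    # followed by the user's original question.
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


if __name__ == "__main__":
    passages = [
        "Warehouse A holds 120 units of item X.",
        "Dental cover is included in the standard benefits plan.",
    ]
    for message in build_grounded_messages("How many units of item X are in stock?", passages):
        print(message["role"], "->", message["content"])
```

Keeping retrieval and prompt assembly separate means the same grounding step works whichever index supplied the passages.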

To further extend the capabilities of large language models, we are excited to announce that Azure Cognitive Search will support vectors in Azure (in private preview), with the ability to store, index, and deliver search applications over vector embeddings of organisational data, including text, images, audio, video, and graphs. Also in private preview, support for plugins in Azure OpenAI Service will simplify integrating external data sources and streamline the process of building and consuming APIs. Available plugins include Azure Cognitive Search, Azure SQL, Azure Cosmos DB, Microsoft Translator, and Bing Search. We are also enabling a Provisioned Throughput Model, which will soon be generally available with limited access, to offer dedicated capacity.
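Vector search ranks stored items by the similarity of their embeddings to a query embedding, rather than by keyword overlap. The sketch below shows the idea in plain Python, assuming a toy in-memory index with hand-made three-dimensional embeddings (real embeddings come from a model and have far more dimensions). It is not the Azure Cognitive Search API; `VectorIndex`, `add`, and `search` are hypothetical names, and the brute-force scan stands in for the approximate nearest-neighbour structures a production service would use.

```python
import math
from typing import Dict, List, Tuple


class VectorIndex:
    """Hypothetical in-memory index using brute-force cosine similarity."""

    def __init__(self) -> None:
        self._vectors: Dict[str, List[float]] = {}

    def add(self, doc_id: str, embedding: List[float]) -> None:
        # Store the embedding under the document's identifier.
        self._vectors[doc_id] = embedding

    @staticmethod
    def _cosine(a: List[float], b: List[float]) -> float:
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def search(self, query: List[float], k: int = 3) -> List[Tuple[str, float]]:
        # Score every stored vector, then return the k most similar, best first.
        scored = [(doc_id, self._cosine(query, vec)) for doc_id, vec in self._vectors.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


if __name__ == "__main__":
    index = VectorIndex()
    # Toy embeddings; a real system would obtain these from an embedding model.
    index.add("inventory-report", [0.9, 0.1, 0.0])
    index.add("benefits-handbook", [0.1, 0.9, 0.2])
    index.add("travel-policy", [0.0, 0.3, 0.9])
    print(index.search([0.8, 0.2, 0.1], k=2))
```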

Customers such as DocuSign, Volvo, Ikea, Crayon, and 4,500 others are already benefitting from Azure OpenAI Service. Learn more about what’s new with Azure OpenAI Service.

We continue to innovate across our AI portfolio, including new capabilities in Azure Machine Learning, so developers and data scientists can apply the power of generative AI to their data. Foundation models in Azure Machine Learning, now in preview, empower data scientists to fine-tune, evaluate, and deploy open-source models curated by Azure Machine Learning, models from the Hugging Face Hub, and models from Azure OpenAI Service, all in a unified model catalogue. This will give data scientists a comprehensive repository of popular models directly within the Azure Machine Learning registry.

We are also excited to announce the upcoming Azure Machine Learning prompt flow preview, which provides a streamlined experience for prompting, evaluating, tuning, and operationalising large language models. With prompt flow, you can quickly create prompt workflows that connect to various language models and data sources, then build intelligent applications and assess the quality of your workflows to choose the best prompt for your use case. See all the announcements for Azure Machine Learning.

It’s great to see momentum for machine learning with customers like Swift, a member-owned cooperative that provides a secure global financial messaging network. It uses Azure Machine Learning to develop an anomaly detection model with federated learning techniques, enhancing global financial security without compromising data privacy. We cannot wait to see what our customers build next.

Run and scale AI-powered, intelligent apps on Azure

Azure’s cloud-native platform is the best place to run and scale applications while seamlessly embedding Azure’s native AI services. Azure lets you choose between control and flexibility, keeping the focus on productivity whichever you choose.

Azure Kubernetes Service (AKS) offers complete control and the quickest way to develop and deploy intelligent, cloud-native apps in Azure, data centers, or at the edge with built-in code-to-cloud pipelines and guardrails. We’re excited to share some of the most highly anticipated innovations for AKS that support the scale and criticality of applications running on it.

To give enterprises more control over their environment, we are announcing long-term support for Kubernetes, which will let customers stay on the same release for two years, twice as long as is possible today. We are also excited to share that, starting today, Azure Linux is available as a container host operating system optimised for AKS. Additionally, we are now enabling Azure customers to access a vibrant ecosystem of first-party and third-party solutions with easy click-through deployments from Azure Marketplace. Lastly, confidential containers are coming soon to AKS as a first-party supported offering. Aligned with Kata Confidential Containers, this feature enables teams to run their applications in a way that supports zero-trust operator deployments on AKS.

Azure lets you choose from a range of serverless execution environments to build, deploy, and scale dynamically on Azure without managing infrastructure. Azure Container Apps is a fully managed service that runs microservices and containerised applications on a serverless platform. We announced several new capabilities, in preview, to help teams simplify serverless application development. Developers can now use Azure Container Apps jobs to run tasks on demand, on a schedule, or in response to events, executing them asynchronously to completion. This new capability lets smaller executables within complex jobs run in parallel, making it easier to run unattended batch jobs alongside your core business logic. With these advancements to our container and serverless products, we are making it seamless and natural to build intelligent cloud-native apps on Azure.
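The execution model described above, a job that fans out smaller units of work, runs them in parallel, and finishes once every unit has completed, can be sketched locally with Python’s `concurrent.futures`. This only illustrates the pattern under assumed names (`process_chunk`, `run_job`, and their parameters are hypothetical); it does not use any Azure Container Apps API, where triggers and parallelism are configured on the job itself.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def process_chunk(chunk: List[int]) -> int:
    # Hypothetical unit of work: here, summing one slice of the batch.
    return sum(chunk)


def run_job(records: List[int], parallelism: int = 4, chunk_size: int = 3) -> int:
    # Split the batch into smaller executables.
    chunks = [records[i:i + chunk_size] for i in range(0, len(records), chunk_size)]
    # Run them in parallel; map() yields results in input order, and leaving
    # the with-block waits until every task has run to completion.
    with ThreadPoolExecutor(max_workers=parallelism) as pool:
        partial_results = list(pool.map(process_chunk, chunks))
    # Combine the partial results only after all tasks have finished.
    return sum(partial_results)


if __name__ == "__main__":
    print(run_job(list(range(1, 11))))  # 55
```

Because the job returns only after the pool has drained, it behaves like an unattended batch run: it starts, completes all of its work, and exits.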

Integrated, AI-based tools to help developers thrive

Making it easier to build intelligent, AI-embedded apps on Azure is just one part of the innovation equation. The other, equally important part is empowering developers to spend more time on strategic, meaningful work and less time toiling on tasks like debugging and infrastructure management. We’re investing in GitHub Copilot, Microsoft Dev Box, and Azure Deployment Environments to simplify processes and increase developer velocity and scale.

GitHub Copilot is the world’s first at-scale AI developer tool, helping millions of developers code up to 55% faster. Today, we announced new Copilot experiences built into Visual Studio that eliminate wasted time when starting a new project. We’re also announcing several new capabilities for Microsoft Dev Box, including new starter developer images and deeper integration of Visual Studio in Microsoft Dev Box, which accelerate setup time and improve performance. Lastly, we’re announcing the general availability of Azure Deployment Environments, with support for HashiCorp Terraform alongside Azure Resource Manager.

Enable secure and trusted experiences in the era of AI

When building, deploying, and running intelligent applications, security cannot be an afterthought; developer-first tooling and workflow integration are critical. We’re investing in new features and capabilities that help you implement security earlier in your software development lifecycle, find and fix security issues before code is deployed, and use integrated tooling to deploy trusted containers to Azure.

We’re pleased to announce that GitHub Advanced Security for Azure DevOps will soon be available in preview. This new solution brings the three core features of GitHub Advanced Security to the Azure DevOps platform, so you can integrate automated security checks into your workflow: code scanning powered by CodeQL to detect vulnerabilities, secret scanning to prevent sensitive information from being committed to code repositories, and dependency scanning to identify vulnerabilities in open-source dependencies and provide update alerts.
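At its core, secret scanning matches repository content against patterns for known credential formats. The toy scanner below illustrates that principle with two assumed patterns, an AWS-style access key ID and a GitHub classic personal access token shape. The pattern set, `SECRET_PATTERNS`, and `scan_text` are hypothetical; GitHub Advanced Security’s actual detectors are far more extensive and are not reproduced here.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; production scanners use many more and often
# validate candidate matches with the issuing service.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "github_classic_pat": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
}


def scan_text(text: str) -> List[Tuple[int, str]]:
    """Return (line number, pattern name) for every suspected secret."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_no, name))
    return findings


if __name__ == "__main__":
    sample = "\n".join([
        "region = 'westeurope'",
        "aws_key = 'AKIAABCDEFGHIJKLMNOP'",  # fake key for demonstration
        "token = 'ghp_" + "a" * 36 + "'",    # fake token for demonstration
    ])
    for finding in scan_text(sample):
        print(finding)
```

Run as a pre-commit hook or pipeline step, a non-empty result from a check like this could fail the build before the secret reaches the repository.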

While security is at the top of the list for any developer, using AI responsibly is no less important. For almost seven years, we have invested in a cross-company program to ensure our AI systems are responsible by design. Our work on privacy and the General Data Protection Regulation (GDPR) has taught us that policies aren’t enough; we need tools and engineering systems that help make it easy to build with AI responsibly. We’re pleased to announce new products and features to help organisations improve accuracy, safety, fairness, and explainability across the AI development lifecycle.

Azure AI Content Safety, now in preview, enables developers to build safer online environments by detecting unsafe images and text across languages and assigning them severity scores, helping businesses prioritise what content moderators review. It can also be customised to address an organisation’s regulations and policies. As part of Microsoft’s commitment to responsible AI, we’re integrating Azure AI Content Safety across our products, including Azure OpenAI Service and Azure Machine Learning, to help users evaluate and moderate both prompts and generated content.
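Severity scores make automatic triage possible: content above a high threshold is blocked, borderline content is queued for human review with the most severe items first, and the rest is allowed. The sketch below assumes items that already carry a per-category severity score; `ScoredItem`, `triage`, the category names, and both thresholds are hypothetical. It does not call Azure AI Content Safety, whose actual categories, severity scale, and response format are defined in the service’s own documentation.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class ScoredItem:
    item_id: str
    severities: Dict[str, int]  # hypothetical: category name -> severity score


def triage(items: List[ScoredItem], review_at: int = 2, block_at: int = 6
           ) -> Tuple[List[str], List[str], List[str]]:
    """Split items into (blocked, review queue ordered by severity, allowed)."""
    blocked, to_review, allowed = [], [], []
    for item in items:
        # An item is judged by its most severe category.
        worst = max(item.severities.values(), default=0)
        if worst >= block_at:
            blocked.append(item.item_id)
        elif worst >= review_at:
            to_review.append((worst, item.item_id))
        else:
            allowed.append(item.item_id)
    # Moderators see the most severe borderline items first (stable for ties).
    to_review.sort(key=lambda pair: pair[0], reverse=True)
    return blocked, [item_id for _, item_id in to_review], allowed


if __name__ == "__main__":
    items = [
        ScoredItem("post-1", {"hate": 0, "violence": 0}),
        ScoredItem("post-2", {"hate": 2, "violence": 4}),
        ScoredItem("post-3", {"hate": 6, "violence": 0}),
        ScoredItem("post-4", {"hate": 3, "violence": 0}),
    ]
    print(triage(items))
```

Thresholds like these are the kind of setting an organisation would tune to its own regulations and policies.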

Additionally, the responsible AI dashboard in Azure Machine Learning now supports text and image data, in preview. This means users can more easily identify model errors, understand performance and fairness issues, and explain a wider range of machine learning model types, including text and image classification and object detection scenarios. Once models are in production, users can monitor them and their production data for model and data drift, run data integrity tests, and intervene when needed with the help of model monitoring, now in preview.
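Data drift monitoring compares the distribution of a feature in production against a baseline, typically the training data. One widely used statistic for this is the population stability index (PSI), computed over binned proportions as the sum of (actual − expected) × ln(actual / expected). The sketch below assumes pre-binned distributions; whether Azure Machine Learning model monitoring uses PSI or other metrics, and with what thresholds, is not stated here, and the 0.2 alert level is a common rule of thumb assumed for illustration.

```python
import math
from typing import Sequence

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb alert level, not an Azure default


def population_stability_index(expected: Sequence[float], actual: Sequence[float],
                               eps: float = 1e-6) -> float:
    """PSI between two binned distributions given as proportions per bin."""
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        # Clamp empty bins to eps so the logarithm stays finite.
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


if __name__ == "__main__":
    baseline = [0.25, 0.25, 0.25, 0.25]  # e.g. training data, four bins
    stable = [0.24, 0.26, 0.25, 0.25]
    shifted = [0.05, 0.15, 0.30, 0.50]
    for name, dist in [("stable", stable), ("shifted", shifted)]:
        psi = population_stability_index(baseline, dist)
        print(name, round(psi, 3), "drift" if psi > DRIFT_THRESHOLD else "ok")
```

Each term is non-negative, because (a − e) and ln(a / e) always share a sign, so the index grows with any shift between bins regardless of direction.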