Fractal’s new model outperforms competitors like o1-mini and o3-mini, with performance close to o4-mini levels.

Fractal, the Mumbai-based AI firm, has introduced a new open-source large language model, Fathom-R1-14B. The model delivers better mathematical reasoning performance than o1-mini and o3-mini and comes close to o4-mini levels, at a post-training cost of only $499.

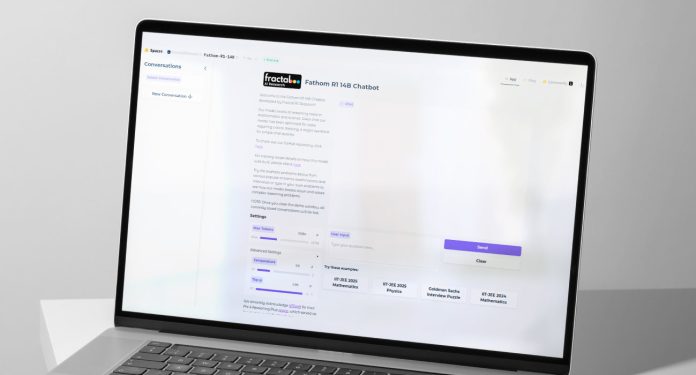

The model can be tested on Hugging Face, and the codebase is hosted on GitHub. The model, datasets, and training recipes are all released under the MIT license.

Created as one component of a proposed project to build India’s first large reasoning model under the IndiaAI Mission, Fathom-R1-14B is a 14-billion-parameter model derived from DeepSeek-R1-Distill-Qwen-14B.

“We recommended developing India’s first large reasoning model under the IndiaAI mission. We recommended developing three models (a small one, a mid-sized one and a large one with 70 billion parameters),” Fractal CEO Srikanth Velamakanni said. “This is just a tiny proof of what’s possible,” he added.

On olympiad-level tests AIME-25 and HMMT-25, Fathom-R1-14B attains 52.71% and 35.26% Pass@1 accuracy, respectively. Given additional inference-time compute (cons@64), the accuracy increases to 76.7% and 56.7%.
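To make the two metrics concrete, here is a minimal sketch of how Pass@1 and cons@k are commonly computed for a single problem. The sampled answers and the helper names (`pass_at_1`, `consensus_answer`, `cons_at_k`) are hypothetical and are not taken from Fractal's evaluation code; the sketch assumes cons@k means a majority vote over k sampled final answers.

```python
from collections import Counter

def pass_at_1(samples, reference):
    """Fraction of individual sampled answers that match the reference."""
    return sum(answer == reference for answer in samples) / len(samples)

def consensus_answer(samples):
    """Majority vote: the most frequent final answer among the samples."""
    return Counter(samples).most_common(1)[0][0]

def cons_at_k(samples, reference):
    """1.0 if the majority-vote answer is correct, otherwise 0.0."""
    return 1.0 if consensus_answer(samples) == reference else 0.0

# Hypothetical final answers from 8 sampled generations for one problem.
samples = ["204", "204", "197", "204", "210", "204", "197", "204"]
reference = "204"

single_sample_accuracy = pass_at_1(samples, reference)
majority_vote_accuracy = cons_at_k(samples, reference)
```

In this toy case, only some of the individual samples are correct, but the majority vote still lands on the right answer. That is why a consensus score can sit well above Pass@1, at the price of generating many more samples per problem.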

“It achieves performance comparable with closed-source o4-mini (low) in terms of cons, all within a 16K context window,” the company added.

The model was post-trained with supervised fine-tuning (SFT), curriculum learning, and model merging. “We perform supervised fine-tuning on carefully curated datasets using a specific training approach, followed by model merging,” the company said.
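The company's statement does not say which merging method was used. As an illustration only, the sketch below shows linear weight averaging, one common form of model merging, applied to toy checkpoints stored as plain Python lists. The function `merge_linear` and the checkpoint contents are hypothetical and are not Fractal's recipe.

```python
def merge_linear(checkpoints, weights):
    """Weighted average of parameters that share the same name and length.

    checkpoints: list of dicts mapping parameter name -> list of floats
    weights:     one non-negative mixing coefficient per checkpoint
    """
    if len(checkpoints) != len(weights):
        raise ValueError("need exactly one weight per checkpoint")
    total = sum(weights)
    merged = {}
    for name, first_values in checkpoints[0].items():
        merged[name] = [
            sum(w * ckpt[name][i] for ckpt, w in zip(checkpoints, weights)) / total
            for i in range(len(first_values))
        ]
    return merged

# Two toy "fine-tuned checkpoints" with a single two-element parameter (assumed data).
checkpoint_a = {"layer.weight": [1.0, 2.0]}
checkpoint_b = {"layer.weight": [3.0, 4.0]}

# Equal weights reduce to a plain element-wise average.
merged = merge_linear([checkpoint_a, checkpoint_b], [1.0, 1.0])
```

With real models the same idea is applied tensor by tensor across the full set of weights, and the mixing coefficients become a tuning choice.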

Fractal has also introduced a separate variant, Fathom-R1-14B-RS, which achieved similar results using a combination of reinforcement learning and SFT, at a cost of $967.

Last year, the firm rolled out Vaidya.ai, a multi-modal AI platform aimed at providing free and equitable healthcare support. Meanwhile, Sarvam, the startup chosen to develop India’s foundation LLM under the IndiaAI Mission, recently launched Sarvam-M, a 24-billion-parameter open-weights hybrid language model based on Mistral Small.