DeepMind trained the model on 604 different tasks, and it reached at least 50 per cent of the expert score in more than 450 of them.

Many industry experts consider Artificial General Intelligence (AGI) to be the ultimate AI achievement. An AGI model would be able to learn, plan, reason, represent knowledge and communicate in natural language. In their latest paper, A Generalist Agent, DeepMind's research team has introduced its own take on this concept.

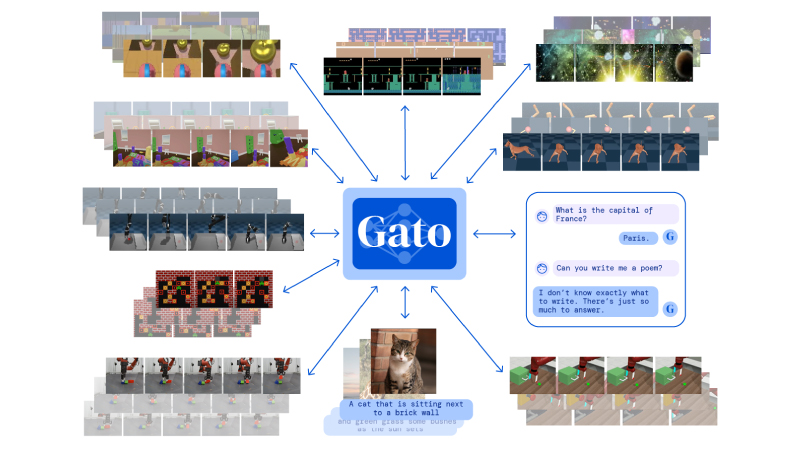

Known as Gato, the single general-purpose agent can perform over 600 diverse tasks, from captioning images and stacking blocks with a real robot arm to navigating simulated 3D environments, all using the same network with the same weights. Gato's transformer sequence model can even outperform human players at Atari games. DeepMind trained this generalist on 604 different tasks, and it reached at least 50 per cent of the expert score in more than 450 of them.

What is Gato?

According to DeepMind, this model acts as both a transformer and an agent, created with the idea of combining Transformers with multi-task reinforcement learning agents. The researchers started from the hypothesis that it is possible to train an agent that is generally capable on a large number of tasks, and that such a general agent can be adapted with a little extra data to succeed at an even larger number of tasks. They noted that a general-purpose agent offers substantial advantages: it removes the need to hand-craft policies for each domain, increases the amount and diversity of training data, and keeps improving as data, compute and model scale grow. A general-purpose agent can also be regarded as a step toward machine learning's ultimate goal of AGI.

Gato can be trained and sampled in the same way as a standard large-scale language model. The primary components of Gato's network design are a parameterised embedding function, which converts tokens into token embeddings, and a sequence model, which outputs a distribution over the next discrete token.
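To make the "trained and sampled like a language model" idea concrete, here is a minimal pure-Python sketch under clearly toy assumptions. The vocabulary size, embedding width and the averaging `toy_sequence_model` are hypothetical stand-ins (Gato itself uses a 24-layer transformer), and none of the names come from DeepMind's code. It shows only the shape of the pipeline: token ids are embedded by a lookup table, a sequence model turns them into a hidden state, a softmax yields a distribution over the next discrete token, and sampling repeats one token at a time.

```python
import math
import random
from typing import List

# Toy sizes chosen for illustration only (hypothetical, not Gato's).
VOCAB_SIZE = 8
EMBED_DIM = 4

_init = random.Random(0)
# Parameterised embedding function: one learnable vector per token id.
EMBEDDING = [[_init.gauss(0.0, 1.0) for _ in range(EMBED_DIM)] for _ in range(VOCAB_SIZE)]
# Output projection mapping a hidden state to one logit per token id.
UNEMBED = [[_init.gauss(0.0, 1.0) for _ in range(EMBED_DIM)] for _ in range(VOCAB_SIZE)]


def embed(tokens: List[int]) -> List[List[float]]:
    """Convert token ids to token embeddings by table lookup."""
    return [EMBEDDING[t] for t in tokens]


def toy_sequence_model(vectors: List[List[float]]) -> List[float]:
    """Hypothetical stand-in for a transformer: averages the embeddings so far.

    Expects a non-empty sequence.
    """
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(EMBED_DIM)]


def next_token_distribution(tokens: List[int]) -> List[float]:
    """Return a softmax distribution over the next discrete token."""
    hidden = toy_sequence_model(embed(tokens))
    logits = [sum(w * h for w, h in zip(row, hidden)) for row in UNEMBED]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample(prompt: List[int], steps: int, rng: random.Random) -> List[int]:
    """Autoregressive sampling: draw a token, append it, repeat."""
    tokens = list(prompt)
    for _ in range(steps):
        probs = next_token_distribution(tokens)
        tokens.append(rng.choices(range(VOCAB_SIZE), weights=probs)[0])
    return tokens


print(sample([1, 2, 3], steps=5, rng=random.Random(42)))
```

Training such a model would adjust `EMBEDDING`, `UNEMBED` and the sequence model so that the predicted distribution puts high probability on the token that actually comes next; sampling, as above, simply feeds each drawn token back in.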

While any general sequence model could be used to predict the next token, the researchers chose a transformer for its simplicity and scalability. Gato employs a 1.2 billion parameter decoder-only transformer with 24 layers, an embedding size of 2048 and a post-attention feedforward hidden size of 8196. In terms of parameters, this makes it more than 100 times smaller than GPT-3, which has 175 billion, and far smaller still than the largest NLP models. The added advantage? The same weights serve every task Gato was trained on, so there is no need to re-train or fine-tune a separate model for each one.
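The reported size can be sanity-checked with back-of-the-envelope arithmetic. The sketch below assumes a standard transformer block (four d×d attention projections for queries, keys, values and output, plus a two-matrix feedforward layer) and ignores biases, layer norms and the embedding table, so it is an estimate rather than DeepMind's own parameter count.

```python
# Rough parameter estimate for a decoder-only transformer using the
# configuration reported for Gato. Assumes standard blocks and ignores
# biases, layer norms and embeddings.
N_LAYERS = 24
D_MODEL = 2048
D_FF = 8196  # post-attention feedforward hidden size, as reported


def block_params(d_model: int, d_ff: int) -> int:
    """Weights in one block: Q, K, V, output projections plus the FFN."""
    attention = 4 * d_model * d_model
    feedforward = 2 * d_model * d_ff  # up-projection and down-projection
    return attention + feedforward


total = N_LAYERS * block_params(D_MODEL, D_FF)
print(f"transformer blocks: {total:,} parameters")  # 1,208,352,768

GPT3_PARAMS = 175e9
GATO_PARAMS = 1.2e9
print(f"GPT-3 / Gato: {GPT3_PARAMS / GATO_PARAMS:.0f}x")  # 146x
```

Under these assumptions the 24 blocks come to roughly 1.21 billion weights, in line with the reported 1.2 billion, and GPT-3 is about 146 times larger.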

Gato is designed to be trained on a wide variety of relevant data. It functions as a multi-modal, multi-task, multi-embodiment generalist model that can adapt to and succeed at tasks with varying modalities, observations and action specifications, and can handle new tasks given minimal additional data.
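One way to picture how a single model copes with differing observation and action specifications is to flatten every timestep into tokens drawn from one shared vocabulary. The sketch below is illustrative and is not Gato's exact scheme: the vocabulary offset `TEXT_VOCAB`, the bin count `NUM_BINS`, the separator id `SEP` and the uniform discretization of values in [-1, 1] are all assumptions made for this example, and both "embodiments" are hypothetical.

```python
from typing import List, Sequence, Tuple

# Hypothetical constants for this sketch only.
TEXT_VOCAB = 32_000          # assumed size of a text vocabulary
NUM_BINS = 1_024             # assumed number of bins for continuous values
SEP = TEXT_VOCAB + NUM_BINS  # hypothetical observation/action separator id

Step = Tuple[Sequence[float], Sequence[float]]  # (observation, action)


def discretize(x: float) -> int:
    """Map a value in [-1, 1] to a token id placed after the text vocabulary."""
    x = max(-1.0, min(1.0, x))  # clip out-of-range values
    bin_index = min(int((x + 1.0) / 2.0 * NUM_BINS), NUM_BINS - 1)
    return TEXT_VOCAB + bin_index


def tokenize_episode(steps: Sequence[Step]) -> List[int]:
    """Emit observation tokens, a separator, then action tokens per step."""
    tokens: List[int] = []
    for observation, action in steps:
        tokens.extend(discretize(v) for v in observation)
        tokens.append(SEP)
        tokens.extend(discretize(v) for v in action)
    return tokens


# Two hypothetical embodiments with different observation/action sizes.
arm_episode = [([0.1, -0.5, 0.9], [0.0, 1.0])]
walker_episode = [([0.2] * 5, [-1.0])]

print(tokenize_episode(arm_episode))
print(len(tokenize_episode(walker_episode)))
```

Because both episodes become plain integer sequences over the same vocabulary, one next-token model can consume them regardless of how many joints or actions each embodiment has.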

Image Source: DeepMind

“With a single set of weights, Gato can engage in dialogue, caption images, stack blocks with a real robot arm, outperform humans at playing Atari games, navigate in simulated 3D environments, follow instructions, and more,” write lead author Scott Reed and colleagues in their paper, A Generalist Agent, posted on arXiv.

Challenges for future AGI

Today, AI research labs such as OpenAI and DeepMind are focused on building robust AI systems on the path to AGI. However, many experts say that these models are still far from AGI. Some argue that the companies are so fixated on AGI that they are stalling other research and undermining the prospects of achieving AGI at all. OpenAI and DeepMind have pursued AGI through models such as GPT-3 and Gato, but they have not yet thoroughly addressed its underlying issues and complex problems, such as an AI model learning new things without any training data.

Gato currently shows potential to perform well in the consumer market, but it is still far from a complete AGI. GPT-3 and Gato also need robust filtering methods to eliminate flaws such as bias, racism and abusive language if they are to produce consistent outcomes.

AGI is meant to power intelligent machines that can perform intellectual tasks and, by studying the human mind, solve any complex problem using cognitive computing capabilities. For now, however, tech companies face critical challenges on the road to AGI, particularly in learning human-centric capabilities such as sensory perception, motor skills, problem-solving and human-level creativity, and the field still lacks a clear direction for future AGI.

Progress in generalist RL agents over the past few years has been remarkable, much of it coming from DeepMind. DeepMind's extensive research seems to be moving the needle closer to human-level intelligence, with its agents slowly but steadily gaining the ability to handle cognitive tasks.

Gato's novelty lies in its ability to perform diverse tasks. It is exciting to think about how the capabilities of these models will evolve. Such progress will certainly also prompt more discussion and research around AI misuse. But it won't be surprising to see a much larger version published soon from OpenAI, DeepMind or Google.