Thomas Amilien, CEO and Co-founder of Clay AIR, a company specialising in gesture control and touchless interaction solutions, sheds light on how human-machine interaction is changing and slowly making traditional input systems obsolete.

Human-machine interactions don’t just happen in big industrial manufacturing plants. They happen every day at home, in the car, at work and at play.

While smart devices have been gaining popularity since the early 2000s, it’s the convergence of 5G, AI and computer vision that is ushering in a new generation of seamless and intuitive user interfaces.

We are only at the beginning of a movement towards ‘Zero-UI’ (zero user interface), where we engage with machines through touch-free controls such as voice, gestures, and biometrics.

Computer Vision Enables Machines to Understand our World

A camera becomes more than a lens when it is combined with powerful software that processes and interprets the images it captures.

This is how computer vision works. Programs powered by advanced algorithms enable the cameras in in-car displays, mobile phones, and augmented reality and virtual reality devices to track and interpret our facial features for identification, as well as actions such as hand, eye and body movements that correlate to specific controls.

As these HMIs (human-machine interactions) become more commonplace, multimodal Zero-UI is already beginning to replace touchscreens, controllers, mice and even keyboards.

Machine Learning: The Brain Behind the Lens

Computer vision does very little by itself. Coupled with advanced machine learning algorithms, however, it is how machines understand and interpret our world.

Let’s take the example of computer vision-based gesture recognition. For a user interface to interpret a “thumbs-up” gesture as a “yes”, the program needs to be designed and trained with millions of 3D images of hands, processing these to learn which ones show a thumbs-up and therefore mean “yes.”

Algorithms can be trained to detect and classify many gestures: first, by understanding which object is a hand, as it has specific characteristics (five fingers, knuckles and a palm); then, by identifying when the hand forms a specific shape that we call a gesture; and finally, by triggering the action that corresponds to the given gesture.

To recognise a ‘thumbs-up’, however, the machine learning model behind the lens performs many calculations:

- It first has to recognise the hand in the field of view and identify where it is in space while it’s moving

- Then, identify where the features of the hand are located, and follow them in space

- Lastly, the model has to identify a modification in the shape or the combination of the features tracked to understand the ‘thumbs-up’ gesture
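The last step, going from tracked hand features to a gesture and then to an action, can be sketched in a few lines. This is only an illustration: it assumes the first two steps have already produced 2D landmark positions, the landmark names (`wrist`, `thumb_tip`, `index_knuckle` and so on) are hypothetical, and a hand-written geometric rule stands in for the trained model that a production system would use.

```python
import math
from typing import Dict, Optional, Tuple

# (x, y) in image coordinates: y grows downward, so "higher" means smaller y.
Point = Tuple[float, float]

FINGERS = ("index", "middle", "ring", "pinky")

# Hypothetical mapping from a recognised gesture to the action it triggers.
ACTIONS = {"thumbs_up": "yes"}


def _dist(a: Point, b: Point) -> float:
    return math.hypot(a[0] - b[0], a[1] - b[1])


def is_thumbs_up(lm: Dict[str, Point]) -> bool:
    """Toy rule: thumb points up and the other four fingers are curled."""
    wrist = lm["wrist"]
    thumb_tip_y = lm["thumb_tip"][1]
    # The thumb tip sits above its own base and above every knuckle.
    thumb_raised = thumb_tip_y < lm["thumb_base"][1] and all(
        thumb_tip_y < lm[f"{f}_knuckle"][1] for f in FINGERS
    )
    # A curled fingertip ends up closer to the wrist than its knuckle.
    curled = all(
        _dist(lm[f"{f}_tip"], wrist) < _dist(lm[f"{f}_knuckle"], wrist)
        for f in FINGERS
    )
    return thumb_raised and curled


def interpret(lm: Optional[Dict[str, Point]]) -> Optional[str]:
    """Return the action for the current frame, or None if nothing matched."""
    if lm is None:  # no hand found in the field of view
        return None
    gesture = "thumbs_up" if is_thumbs_up(lm) else None
    return ACTIONS.get(gesture)


# Made-up landmark positions for a fist with the thumb raised.
SAMPLE_THUMBS_UP = {
    "wrist": (50, 100), "thumb_base": (45, 70), "thumb_tip": (45, 30),
    "index_knuckle": (60, 55), "index_tip": (50, 65),
    "middle_knuckle": (62, 65), "middle_tip": (50, 72),
    "ring_knuckle": (62, 75), "ring_tip": (50, 80),
    "pinky_knuckle": (60, 85), "pinky_tip": (50, 88),
}
```

Calling `interpret(SAMPLE_THUMBS_UP)` returns the action mapped to the gesture, while a frame with no hand, or with the fingers extended, returns `None`.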

All of these steps require computing power. This is where 5G and distributed processing become essential.

An example of hand tracking and gesture recognition on Nreal glasses using Clay AIR technology

5G and The Cloud

Machine learning algorithms must process vast quantities of data to perform image recognition. This demands a great deal of processing power and can be costly in battery consumption.

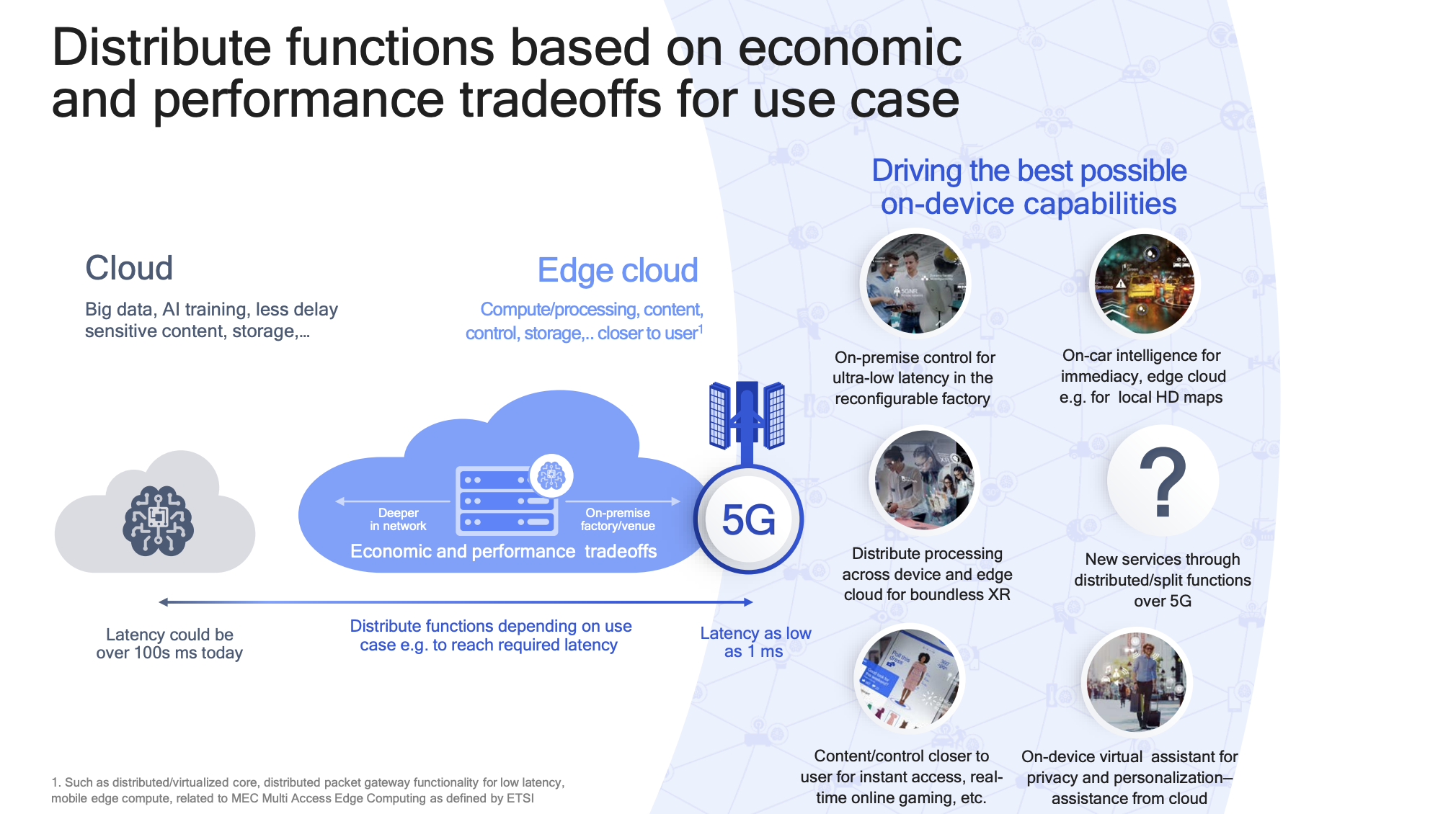

Hugo Swart, Qualcomm’s head of XR, showcases in the image below how 5G is an enabler of cloud computing and a solution for next-generation high-speed data processing:

Hugo Swart (Qualcomm, Inc.): 5G, Distributed Processing & Technology Advancements (source)

For example, imagine you’re recording a movie on your phone. At a camera refresh rate of 30 frames per second, your smartphone generates 30 images every second.

Now, imagine a machine learning model plugged into the smartphone to detect gestures.

Every second, the ML model will process 30 images, looking for thousands of pieces of information (is this a hand, where is it, where is the thumb, is this a thumbs-up, etc.).

Every time the camera refreshes, the machine learning algorithm analyses a picture, which is a matrix of up to 3840×2160 pixels (more than 8 million pixels!). Within a second, the algorithm will have processed 30 times this amount of data.
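The arithmetic behind these figures is easy to check. The short sketch below uses the resolution and frame rate quoted above; the three bytes per pixel (uncompressed 8-bit RGB) is an assumption added for illustration, not a figure from any particular device.

```python
WIDTH, HEIGHT = 3840, 2160   # maximum frame size quoted in the article
FPS = 30                     # camera refresh rate quoted in the article
BYTES_PER_PIXEL = 3          # assumption: uncompressed 8-bit RGB

pixels_per_frame = WIDTH * HEIGHT            # 8,294,400 pixels
pixels_per_second = pixels_per_frame * FPS   # 248,832,000 pixels
frames_per_minute = FPS * 60                 # 1,800 frames

# Raw data volume per second under the RGB assumption above.
raw_bytes_per_second = pixels_per_second * BYTES_PER_PIXEL

print(f"{pixels_per_frame:,} pixels per frame")
print(f"{pixels_per_second:,} pixels per second")
print(f"{frames_per_minute:,} frames per minute")
print(f"{raw_bytes_per_second / 1e6:.1f} MB per second, uncompressed")
```

In practice frames are usually downscaled or compressed before a model sees them, so these numbers are an upper bound rather than a typical workload.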

While microprocessor technology has rapidly evolved, the advancements have been unable to keep pace with the quantity and quality of data we use, especially as we begin to move into the spatial web. This is a 3D world that includes fully interactive, persistent and multi-user augmented and virtual reality experiences.

Enter 5G and cloud computing combined.

Qualcomm defines 5G as a wireless technology that delivers multi-Gbps peak data speeds, ultra-low latency, reliable connectivity, massive network capacity, increased availability, and a more consistent user experience.

5G powers cloud computing, with a vision to process data through high-speed wireless networks rather than directly on a device. In the near future, data will be compressed and sent from our device to a nearby data centre where calculations are made, and the results will be relayed directly back to our devices near-instantaneously.
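One way to see why latency matters here is to compare the round trip with the time available per frame. The sketch below is a simplified model in which each frame's round trip must finish before the next frame arrives; the stage names and all timings are hypothetical values chosen for illustration, not measurements of any network or device.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def round_trip_ms(compress_ms: float, uplink_ms: float,
                  compute_ms: float, downlink_ms: float) -> float:
    """Total time to compress a frame, send it, process it remotely
    and receive the result."""
    return compress_ms + uplink_ms + compute_ms + downlink_ms


def fits_frame_budget(fps: float, compress_ms: float, uplink_ms: float,
                      compute_ms: float, downlink_ms: float) -> bool:
    """True if the whole round trip completes within one frame interval."""
    total = round_trip_ms(compress_ms, uplink_ms, compute_ms, downlink_ms)
    return total <= frame_budget_ms(fps)


# Hypothetical timings (ms): a low-latency link versus a slower one.
print(fits_frame_budget(30, compress_ms=5, uplink_ms=8, compute_ms=10, downlink_ms=8))
print(fits_frame_budget(30, compress_ms=5, uplink_ms=30, compute_ms=10, downlink_ms=30))
```

At 30 frames per second the budget is about 33 ms, so under this model only the first set of timings keeps up with the camera. Real systems can overlap stages across frames, which relaxes the constraint.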

Augmented and virtual reality in particular will benefit from computer vision and machine learning backed by 5G and cloud computing: headsets and smart glasses will be light, untethered, and interconnected with intelligent buildings and the IoT that surrounds us.

The Opportunity for Consumers and Enterprises

Better visual content and faster, more lightweight devices will add an entirely new dimension of interactivity with the world.

We’ve already been able to glimpse what this may look like as Nreal has released consumer and enterprise-focused AR glasses, featuring hand tracking that enables users to interact freely with the device through simple and intuitive gesture controls for a Zero-UI.

Lenovo’s ThinkReality A6 enterprise augmented reality headset, supporting hand tracking, offers a streamlined solution where companies can build, deploy, and manage applications and content on a global scale. Lenovo also recently announced the ThinkReality A3 lightweight, intelligent glasses, designed to increase collaboration between remote teams.

Another example is Microsoft’s Mesh, a new mixed-reality platform powered by the company’s cloud platform, Azure, that allows people in different physical locations to join collaborative and shared holographic experiences on many kinds of devices.

These are just a few devices powered by the intersection of 5G, cloud computing and computer vision.

Beyond AR and VR, in-car gesture controls, menu controls, driver monitoring systems that track drowsiness and attention, touchless interactions for public display interfaces and intuitive controls for robots and smart devices are becoming part of this invisible technology.

Ethical Considerations for a New Wave of Interactivity

Referring back to the example above, where a machine learning model processes 1,800 images for a one-minute video, this new wave of interactivity is not without its data concerns.

Machine learning algorithms don’t ask express permission for data to be captured, so the best solution is for data to be anonymised or immediately discarded. Technology providers will have different privacy approaches, and finding the right partner can help build long-lasting consumer trust.

We also talked above about how massive datasets are required to train an algorithm. This is crucial in the development of a program that recognises and responds to a diverse user base. In the example of hand tracking, data needs to include the hands of people of diverse ethnicities, genders and ages to create inclusive technology.

The process of designing and training an algorithm also carries intrinsic biases. Every person, depending on their background, identity, culture, ethnicity, gender and so on, carries biases. Transparent processes have to be put in place at every stage, from recruitment and data selection to training and testing methodologies.

By establishing strong diversity principles today, we can create technology that is equally accessible to all, while respecting people’s privacy.