Developers have observed that as conversational AI bots grow more complex, meeting the expectation of a real-time response becomes more challenging.

Today’s AI systems, from Siri to Alexa to Google, are designed with a single goal: to understand us. The latest AI techniques can understand several types of text with human-level accuracy by performing hundreds of billions of calculations in the blink of an eye.

According to Gartner, in 2022, chatbots are expected to cut business costs by $8 billion, and by 2023, both businesses and consumers will save 2.5 billion hours through chatbots. By 2024, the overall market size for chatbots worldwide is predicted to exceed $1.3 billion. However, Acquia’s recent survey, which analysed responses from more than 5,000 consumers and 500 marketers in North America, Europe, and Australia, found that 45 per cent of consumers find chatbots “annoying”.

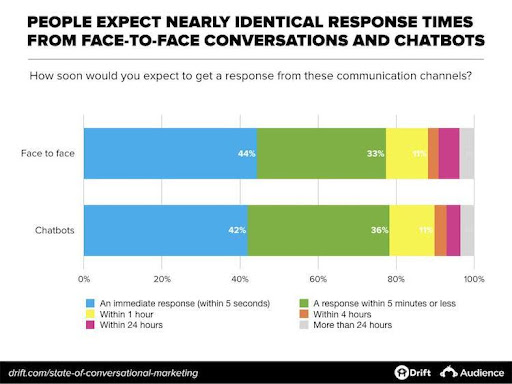

The reason? Some chatbots are too slow to respond.

Is it limited to speed?

Research by Salesforce shows that 69 per cent of consumers prefer chatbots with quick responses to communicate with brands. Nevertheless, developers have observed that as conversational AI bots grow more complex, meeting the expectation of a real-time response becomes more challenging. Processing becomes more complex when text is part of a larger conversation, yet considering context is essential to interpret what the user means and decide how to respond. Advanced chatbots like Facebook’s BlenderBot 2.0 foreshadow far less frustrating interactions with AI.

It takes a vast network of ML models for conversational AI to determine what to say next, with each model solving a small piece of the puzzle. One model might consider the user’s location; another might weigh the history of the interaction and the feedback that similar responses have received. Every model adds milliseconds to the system’s latency, and in a live environment users expect an immediate response. For conversational AI bots, every potential improvement is therefore weighed against the desire for lower latency.

That latency is determined by what is known as the “critical path”: the shortest and most efficient sequence of linked ML models required to go from input (the user’s message) to output (the bot’s response). So what is essential for deriving the critical path? It all boils down to dependencies, which have long been a defining problem of software development in general.
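As a rough illustration of how dependencies shape latency, the sketch below models a handful of ML models as a dependency graph. The model names and millisecond figures are hypothetical, and the sketch assumes that models with no dependency on each other can run in parallel, so the end-to-end latency is set by the slowest chain of dependent models leading to the response.

```python
# Hypothetical models: name -> (latency in ms, models it must wait for).
MODELS = {
    "spell_correct": (5, []),
    "entity_recognition": (12, ["spell_correct"]),
    "intent": (20, ["spell_correct"]),
    "user_location": (8, []),
    "response_ranker": (15, ["entity_recognition", "intent", "user_location"]),
}


def finish_time(name, models):
    """Earliest time (ms) at which `name` can finish, given its dependencies."""
    latency, deps = models[name]
    # A model starts only once its slowest dependency has finished.
    return latency + max((finish_time(d, models) for d in deps), default=0)


def critical_path(name, models):
    """Chain of dependent models that determines the finish time of `name`."""
    _, deps = models[name]
    if not deps:
        return [name]
    slowest = max(deps, key=lambda d: finish_time(d, models))
    return critical_path(slowest, models) + [name]


total_ms = finish_time("response_ranker", MODELS)
path = critical_path("response_ranker", MODELS)
print(total_ms, path)
```

In this toy graph, speeding up `user_location` would not change the response time at all, because it does not sit on the chain that determines the total. Only improvements to models on that chain reduce what the user experiences.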

In any connected software architecture, improving one application can force engineers to update the entire system. Sometimes, though, an essential update for Application A is incompatible with Applications B, C, and D. This predicament is known as “dependency hell”. Without attention to detail, machine learning dependencies take that frustration to new depths.

Overcoming dependencies

Machine learning needs to be combined with human intuition to untangle the dependencies in conversational AI, by determining which models and which steps in the critical path are actually necessary to resolve the user’s request. Natural language understanding (NLU) transforms unstructured text into machine-actionable information. It is a pipeline of many ML models that correct potential typos, recognise key entities, separate the signal from the noise, help figure out the user’s intent, and so on. With this information in hand, unnecessary models downstream can be identified and winnowed out. That makes it possible to predict a helpful solution to the issue even before analysing the available solutions.
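The idea of winnowing out unnecessary downstream models can be sketched as a graph traversal. In the example below, the intents, model names, and dependency graph are all hypothetical. Once the NLU stage has produced an intent, only the models that the answering model depends on, directly or indirectly, are kept.

```python
# Hypothetical dependency graph: model -> models it needs as input.
DEPENDS_ON = {
    "order_status_answer": ["order_lookup", "entity_recognition"],
    "store_finder_answer": ["user_location", "entity_recognition"],
    "order_lookup": ["entity_recognition"],
    "entity_recognition": ["spell_correct"],
    "user_location": [],
    "spell_correct": [],
}

# Hypothetical mapping from a detected intent to the model that answers it.
ANSWER_MODEL_FOR_INTENT = {
    "order_status": "order_status_answer",
    "find_store": "store_finder_answer",
}


def required_models(intent):
    """Return the set of models that must run to answer `intent`."""
    needed = set()
    stack = [ANSWER_MODEL_FOR_INTENT[intent]]
    while stack:
        model = stack.pop()
        if model not in needed:
            needed.add(model)
            stack.extend(DEPENDS_ON[model])
    return needed


def skippable_models(intent):
    """Models in the graph that can be skipped for `intent`."""
    return set(DEPENDS_ON) - required_models(intent)


print(sorted(required_models("order_status")))
print(sorted(skippable_models("order_status")))
```

For an order-status question, the location model and the store finder never run, so they add nothing to the latency of that response.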

Newer directions

Chatbots based solely on machine learning are black-box systems that cannot work without vast amounts of curated training data. If they do not immediately understand what you expect them to, they cannot be easily tuned or enriched by the developer. Tech giants are also turning towards linguistic learning, a method that does not use statistical data the same way a machine learning system does. Instead, it is a rule-based system that allows for human oversight and fine-tuning of the rules and responses, allowing much more control over how questions are understood and responses are given.

With linguistic learning, if the chatbot is required to interpret a specific sentence differently, developers can tell it to do so by reprogramming the rules. In traditional machine learning, by contrast, the system needs to be convinced to interpret the sentence differently by being shown lots of counter-examples. Another method that aids quicker response time is to add more distributed data. Data needs to be available in large quantities and be accurate, classified, readable, and relevant.
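To illustrate the rule-based approach, here is a minimal sketch of an intent matcher built on regular expressions. The rules and intent names are invented for illustration and are far simpler than a production linguistic system. The point is only that a developer changes how a sentence is interpreted by editing a rule, not by retraining on counter-examples.

```python
import re

# Hypothetical rules, checked in order: (pattern, intent).
RULES = [
    (re.compile(r"\b(cancel|stop)\b.*\border\b", re.IGNORECASE), "cancel_order"),
    (re.compile(r"\bwhere\b.*\border\b", re.IGNORECASE), "order_status"),
]


def interpret(text, rules=RULES):
    """Return the intent of the first rule whose pattern matches `text`."""
    for pattern, intent in rules:
        if pattern.search(text):
            return intent
    return "unknown"


sentence = "Stop sending emails about my order"

# With the original rules, "stop ... order" is read as a cancellation.
before = interpret(sentence)

# The developer corrects the interpretation by adding one higher-priority rule.
UPDATED_RULES = [
    (re.compile(r"\bstop\b.*\bemails\b", re.IGNORECASE), "unsubscribe"),
] + RULES
after = interpret(sentence, UPDATED_RULES)

print(before, "->", after)  # cancel_order -> unsubscribe
```

The trade-off is that every such correction is written and maintained by hand, which is the human oversight the rule-based approach relies on.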

No one knows what conversational AI might look like in the next ten years. Nevertheless, it is clear that chatbots need to be optimised now to make room for future progress. Breakthroughs in artificial intelligence result from many minor, incremental improvements to existing models and techniques. A successful chatbot requires work, and as voice becomes a standard consumer interface, chatbots will need to evolve from pure text to a blend of inputs and outputs.
